Web crawler development – Get started with WebMagic

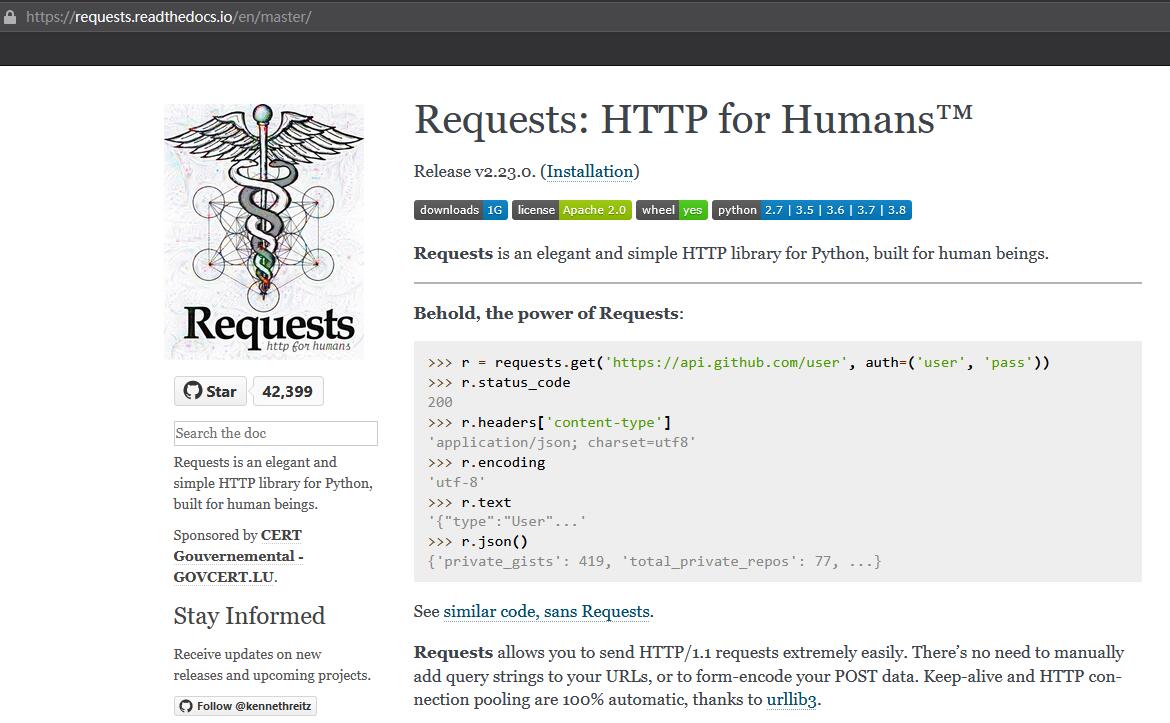

When it comes to writing a web crawler, the first language that comes to mind is usually Python. After all, Python is convenient, and crawler frameworks such as Scrapy and pyspider are very popular.

But what if you are a Java developer who wants to build a web crawler without changing your language or technology stack? In fact, the Java ecosystem has many excellent crawler frameworks of its own.

Java crawler frameworks

- Apache Nutch

Nutch is a well-matured, production-ready web crawler. Nutch 1.x enables fine-grained configuration, relying on Apache Hadoop™ data structures, which are great for batch processing.

Nutch is feature-rich and well documented, with modules for data fetching, parsing, and storage. Its defining characteristic is that it is built for large-scale crawling.

- Heritrix

Heritrix is the Internet Archive's open-source, extensible, web-scale, archival-quality web crawler project.

Heritrix is relatively mature, with a complete feature set, thorough documentation, and plenty of online material. It ships with its own web management console, served by a built-in HTTP server, and operators drive it through that console by choosing crawler commands.

- crawler4j

crawler4j is an open source web crawler for Java which provides a simple API for crawling the Web. Using it, you can set up a multi-threaded web crawler in a few minutes.

crawler4j originated at the University of California, Irvine (UCI). It is concise, long-established, and clearly structured.

- WebMagic

WebMagic is a scalable crawler framework. It covers the whole life cycle of a crawler: downloading, URL management, content extraction, and persistence. It can simplify the development of a specific crawler.

WebMagic borrows from Scrapy, including its pipeline concept, and its feature set is relatively simple.

WebMagic example for newbies

WebMagic's core features require two jars: webmagic-core-{version}.jar and webmagic-extension-{version}.jar. Just add them to your classpath and you are good to go.

WebMagic uses Maven to manage its dependencies, but of course you can use it without Maven.

Let's use Maven to build an example.

1. Add Maven dependency.

Add the following coordinates to your own project (an existing project or a new one):

    <dependency>
        <groupId>us.codecraft</groupId>
        <artifactId>webmagic-core</artifactId>
        <version>0.7.3</version>
    </dependency>
    <dependency>
        <groupId>us.codecraft</groupId>
        <artifactId>webmagic-extension</artifactId>
        <version>0.7.3</version>
    </dependency>

WebMagic uses slf4j-log4j12 as its slf4j implementation. If you prefer a different slf4j implementation, exclude slf4j-log4j12 from the dependency:

    <dependency>
        <groupId>us.codecraft</groupId>
        <artifactId>webmagic-extension</artifactId>
        <version>0.7.3</version>
        <exclusions>
            <exclusion>
                <groupId>org.slf4j</groupId>
                <artifactId>slf4j-log4j12</artifactId>
            </exclusion>
        </exclusions>
    </dependency>

2. Scraping example

WebMagic is structured around four components: Downloader, PageProcessor, Scheduler, and Pipeline, all of which are organized by the Spider. These four components correspond to the download, processing, management, and persistence stages of the crawler life cycle. WebMagic's design borrows from Scrapy, but the implementation is more idiomatic Java.

The Spider wires these components together so that they can interact with each other and carry out the crawl. It can be thought of as a large container, and it is also the core of WebMagic's logic.
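To make the division of labor concrete, here is a minimal sketch of how a spider might wire four such components together. It uses only the JDK, and every type in it (`MiniSpider`, its nested interfaces, and the in-memory "site") is hypothetical and invented for illustration; WebMagic's real interfaces have different signatures.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;

// Hypothetical miniature of the four-component design. These are NOT WebMagic's real types.
class MiniSpider {

    /** Fetches the raw content for a URL, or null if it cannot be fetched. */
    interface Downloader { String download(String url); }

    /** Fills in result fields and returns the newly discovered URLs. */
    interface PageProcessor { List<String> process(String body, Map<String, String> fields); }

    /** Stores the fields extracted from one page. */
    interface Pipeline { void persist(Map<String, String> fields); }

    /** Queues URLs to visit and drops the ones it has already seen. */
    static class Scheduler {
        private final Queue<String> queue = new ArrayDeque<>();
        private final Set<String> seen = new HashSet<>();

        void push(String url) {
            if (seen.add(url)) {
                queue.add(url);
            }
        }

        String poll() { return queue.poll(); }
    }

    private final Downloader downloader;
    private final PageProcessor processor;
    private final Pipeline pipeline;
    private final Scheduler scheduler = new Scheduler();

    MiniSpider(Downloader downloader, PageProcessor processor, Pipeline pipeline) {
        this.downloader = downloader;
        this.processor = processor;
        this.pipeline = pipeline;
    }

    /** The spider loop: schedule, download, process, persist, repeat. */
    void run(String startUrl) {
        scheduler.push(startUrl);
        String url;
        while ((url = scheduler.poll()) != null) {
            String body = downloader.download(url);
            if (body == null) {
                continue;
            }
            Map<String, String> fields = new LinkedHashMap<>();
            for (String link : processor.process(body, fields)) {
                scheduler.push(link);
            }
            if (!fields.isEmpty()) {
                pipeline.persist(fields);
            }
        }
    }

    /** Crawls a fake in-memory site and returns the stored titles in crawl order. */
    static List<String> demo() {
        Map<String, String> site = new LinkedHashMap<>();
        site.put("/a", "title=A;links=/b,/c");
        site.put("/b", "title=B;links=/a");
        site.put("/c", "title=C;links=");

        List<String> stored = new ArrayList<>();
        MiniSpider spider = new MiniSpider(
                site::get,
                (body, fields) -> {
                    String[] parts = body.split(";");
                    fields.put("title", parts[0].substring("title=".length()));
                    List<String> links = new ArrayList<>();
                    for (String link : parts[1].substring("links=".length()).split(",")) {
                        if (!link.isEmpty()) {
                            links.add(link);
                        }
                    }
                    return links;
                },
                fields -> stored.add(fields.get("title")));
        spider.run("/a");
        return stored;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [A, B, C]
    }
}
```

Note that page `/b` links back to `/a`, yet `/a` is processed only once because the scheduler remembers which URLs it has already queued. In this sketch, writing a new crawler means supplying a different page processor while the loop itself stays unchanged, which mirrors the role the article assigns to PageProcessor.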

The overall architecture of WebMagic is as follows:

After adding the Maven dependencies to your project, you just need to write your own class that implements the PageProcessor interface. Here we take GitHub as an example:

    import us.codecraft.webmagic.Page;
    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.Spider;
    import us.codecraft.webmagic.processor.PageProcessor;

    public class GithubRepoPageProcessor implements PageProcessor {

        // Crawl settings: retry failed downloads 3 times, sleep 100 ms between requests.
        private Site site = Site.me().setRetryTimes(3).setSleepTime(100);

        @Override
        public void process(Page page) {
            // Queue every link that looks like https://github.com/<user>/<repo>.
            page.addTargetRequests(page.getHtml().links().regex("(https://github\\.com/\\w+/\\w+)").all());
            // Extract the result fields from the URL and the page HTML.
            page.putField("author", page.getUrl().regex("https://github\\.com/(\\w+)/.*").toString());
            page.putField("name", page.getHtml().xpath("//h1[@class='entry-title public']/strong/a/text()").toString());
            if (page.getResultItems().get("name") == null) {
                // Skip this page: without a name it is not a repository page.
                page.setSkip(true);
            }
            page.putField("readme", page.getHtml().xpath("//div[@id='readme']/tidyText()"));
        }

        @Override
        public Site getSite() {
            return site;
        }

        public static void main(String[] args) {
            // Start from one profile page and crawl with 5 threads.
            Spider.create(new GithubRepoPageProcessor()).addUrl("https://github.com/code4craft").thread(5).run();
        }
    }

Click the main method and select "Run"; you should see the crawler working properly!
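It can help to check what the two regular expressions in `process` actually capture before pointing the crawler at a live site. The sketch below applies the same patterns with plain `java.util.regex`. It assumes that WebMagic's `regex(...)` selector searches within the string and returns the first capture group, which is an assumption about the library rather than something stated above; the `GithubRegexDemo` class and its method names are made up for this illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for experimenting with the patterns from GithubRepoPageProcessor.
class GithubRegexDemo {

    // The same two patterns used in the process method.
    static final Pattern REPO_LINK = Pattern.compile("(https://github\\.com/\\w+/\\w+)");
    static final Pattern AUTHOR = Pattern.compile("https://github\\.com/(\\w+)/.*");

    /** Returns capture group 1 for every link in which the repository pattern is found. */
    static List<String> repoLinks(List<String> links) {
        List<String> matched = new ArrayList<>();
        for (String link : links) {
            Matcher m = REPO_LINK.matcher(link);
            if (m.find()) {
                matched.add(m.group(1));
            }
        }
        return matched;
    }

    /** Returns the user segment of a GitHub URL, or null when the pattern is not found. */
    static String author(String url) {
        Matcher m = AUTHOR.matcher(url);
        return m.find() ? m.group(1) : null;
    }

    public static void main(String[] args) {
        List<String> links = new ArrayList<>();
        links.add("https://github.com/code4craft/webmagic/issues"); // trimmed to the repository URL
        links.add("https://github.com/code4craft");                 // profile page, no repository segment
        links.add("https://github.com/apache/commons-lang");        // \w does not match '-'
        System.out.println(repoLinks(links));
        System.out.println(author("https://github.com/code4craft/webmagic"));
        System.out.println(author("https://github.com/code4craft"));
    }
}
```

Two things stand out under these assumptions. First, `\w` matches only letters, digits, and underscores, so a repository name containing a hyphen is cut off at the hyphen. Second, the author pattern needs a slash after the user name, so the start URL `https://github.com/code4craft` yields no author; that page is only a source of links, and the processor skips it because no repository name is found there either.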