Roadmap to becoming a web crawler developer in 2020

In today's era of big data, web crawlers have become an important means of obtaining data.

But learning to crwal well is not that simple. First of all, there are too many knowledge points and directions. It is related to computer networks, programming foundation, front-end development, back-end development, app development and reverse, network security, database, operation and maintenance, machine learning, data analysis, etc. It connects some mainstream technology stacks together like a big net. Because of the many directions covered, the things to learn are also very scattered and messy. Many beginners are not sure what to learn, and they don't know how to solve the problem of Anti-Spider in the learning process. In this article, we will make some induction and summary.

Web Crawler Newbie

Some of the most basic websites, often without any Anti-Apider measures. For example, for a blog site, if we want to crawl the whole site, we can crawl down the list page to the article page, and then crawl down the time, author, body and other information of the article.

How to write the code? It's enough to use Python requests and other libraries. Write a basic logic, and then obtain the source code of an article. When parsing, use XPath, BeautifulSoup, PyQuery or regular expressions, or rough string matching. Pull out the desired content, add a text and save it.

The code is simple, just call a few methods. The logic is very simple, several cycles plus storage. Finally, we can see that a piece of article is saved in our own computer. Of course, some students may not be able to write code or are too lazy to write, so they can use some visual crawling tools to crawl down the data through visual point selection.

If the storage is slightly expanded, you can conenct to MySQL, MongoDB, Elasticsearch, Kafka, etc. to save data and achieve persistent storage. It will be more convenient to query or operate in the future.

Anyway, regardless of efficiency, a website without Anti-Spider at all can be done in the most basic way.

When you get here, you say you can crawl? No, it's still far away.

Ajax, dynamic rendering

With the development of the Internet, the front-end technology is also constantly changing, and the data loading method is no longer purely server-side rendering. Now you can see that the data of many websites may be transmitted through the APIs, or even if it's not an API, it's some JSON data, which is then rendered by JavaScript.

At this time, you need to use requests to crawl, which is useless, because the source code of requests crawled down is rendered by the server, and the browser sees the page and the results obtained by requests are different. The real data is executed by JavaScript. The data source may be Ajax, it may be some data in the page, or it may be some ifame pages, etc., but in most cases, it may be obtained by the Ajax API.

So in many cases, you need to analyze Ajax, know how these APIs are called, and then simulate them programmatically. However, some APIs with encryption parameters, such as token, sign, etc., which are not easy to simulate, how to do?

One way is to analyze the JavaScript logic of the website, pull the code in it, find out how these parameters are structured, find out the idea, and then use the crawler to simulate or rewrite it. If you solve it, the direct simulation method will be very efficient, which requires some JavaScript foundation. Of course, some website encryption logic is too good. You may not be able to solve it for a week, and finally give up.

What if you can't solve it or don't want to solve it? At this time, there is a simple and crude way to crawl directly by simulating the browser, such as using Puppeter, Pyppeteer, Selenium, Splash, etc., so that the source code crawled is the real web code, and the data is naturally extracted. At the same time, it also bypasses the process of analyzing Ajax and some JavaScript logic. In this way, it can be seen and crawled without difficulty. At the same time, the browser is simulated, and there are few legal problems.

But in fact, the latter method will also encounter various Anti-Spider situations. Nowadays, many websites will recognize the webdriver, seeing that you are using tools such as Selenium, you can directly kill or do not return data, so you have to solve this problem specifically when you come across this kind of website.

Multi-process, Multi-thread, Coroutine

The above situation is relatively simple to simulate with a single-thread crawler, but the problem is that the speed is slow.

Crawlers are IO-intensive tasks, so they may be waiting for the network to respond in most cases. If the response speed of the network is slow, they have to wait all the time. But this spare time actually allows the CPU to do more. So what should we do? Open more threads.

So at this time, we can add multi-process and multi-thread in some scenarios. Although multi-thread has GIL lock, the impact on the crawler is not that great, so multi-process and multi-thread can improve the crawling speed, the corresponding library has threading and multiprocessing.

Asynchronous coroutines are even more powerful. With aiohttp, gevent, tornado, etc. Basically, you can do as much concurrency as you want, but take it easy. Don't hang up other people's website.

In short, with these, the speed of crawler will be raised.

But speed is not necessarily a good thing. Anti-Spider is definitely coming, blocking your IP address, blocking your account, playing captcha code, and return fake data. So sometimes turtle speed crawling seems to be a solution?

Distributed

Multi-threading, multi-process, and coroutine can be accelerated, but in the end it is still a stand-alone crawler. To truely achieve scale, you have to rely on distributed crawlers.

What is the core of distributed? Resource sharing. Such as crawling queue sharing, deduplication sharing, etc.

We can use some basic queue or components to achieve distribution, such as such as the RabbitMQ, Celery, Kafka, Redis, etc., but after many people try to implement a distributed crawler, there will always be some problems with performance and scalability. Of course, the exception is particularly good. Many enterprises have a set of distributed crawlers developed by themselves, which are closer to their business, this is best way.

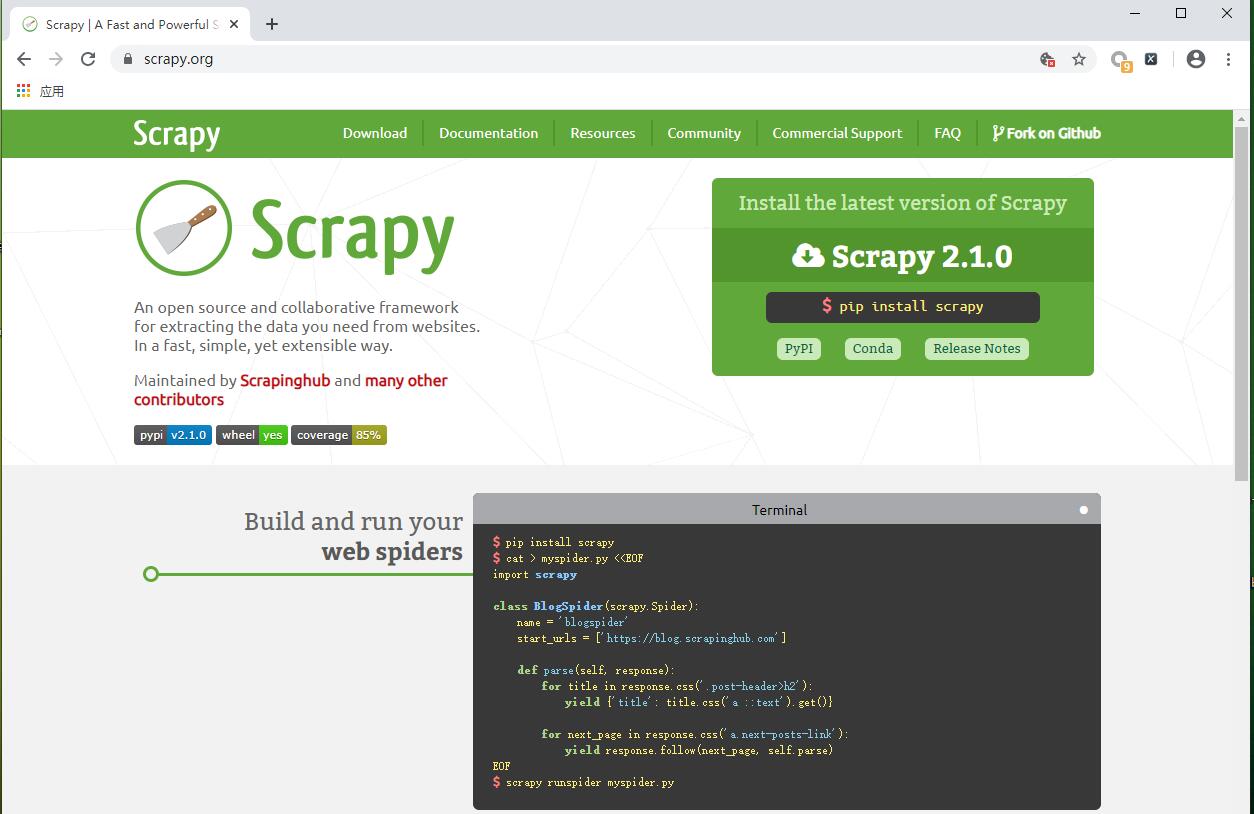

At present, mainstream Python distributed crawlers are still based on Scrapy, docking Scrapy-Redis, Scrapy-Redis-BloomFilter, or using Scrapy-Cluster, etc., they are based on Redis to share the crawl queue, and they will always encounter some memory problems. Therefore, some people also consider connecting to other message queues, such as RabbitMQ, Kafka, etc., to solve some problems, and the efficiency is not bad.

In short, to improve the efficiency of crawling, distribution must be mastered.

Captcha Code

Crawlers inevitably encounter Anti-Spider, captcha code is one of them. To be able to crack Anti-Spider, you must first be able to solve the captcha code.

Now you can see that many websites have a variety of captcha codes, such as the simplest graphic captcha code. If the text of the captcha code is regular, OCR or the basic model library can recognize it. If you don't want to do this, you can directly connect with a captcha human bypass service to do it. The accuracy is still there.

However, you may not see any graphic captcha codes now. They are all behavior captcha codes, there are also many abroad, such as reCaptcha and so on. Some are slightly simpler, such as sliding. You can find a way to identify gap, such as image processing comparison and deep learning recognition. The track is to write a simulation of normal human behavior, add a little jitter and so on. How to simulate after having the track? If you're good at it, you can directly analyze the JavaScript logic of the captcha code, input the track data, and then you can get some encrypted parameters in it. You can directly use them in the form or API with these parameters. Of course, you can also drag it in the way of a simulated browser, or you can get the encryption parameters in a certain way, or directly use the method of simulating the browser to do the login together and crawl with cookies.

Of course, dragging is just a kind of captcha code, as well as text selection, logical reasoning, etc. if you really don't want to do it, you can find a captcha human bypass service to solve it and then simulate it. But after all, spending money, some experts will choose to train deep learning related models, collect data, mark, train, and train different models for different businesses. In this way, with the core technology, it is no longer necessary to spend money to find a captcha human bypass service. After studying the logic simulation of the captcha code, the encryption parameters can be solved. But some captcha codes are hard to figure out, and some of them I haven't figured out yet.

Of course, some captcha codes may pop up due to too frequent requests, which can be solved by changing the IP address.

IP blocking

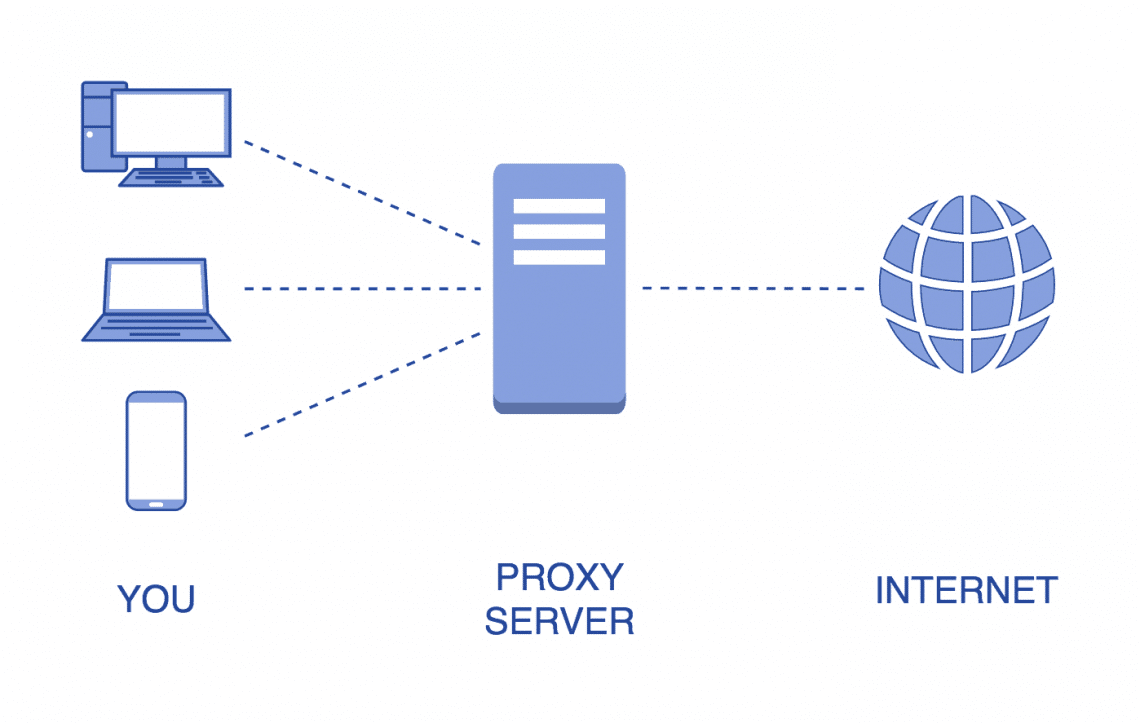

IP blocking is also a headache. The effective way is to change IP by using proxy.

There are many kinds of proxies. There are too many free and fee-based proxies on the market.

First of all, you can use the free proxies on the market, build a proxy pool by yourself, collect all the free proxies on the whole network, and then add a tester to test continuously. The test URL can be changed to the website you want to crawl. In this way, those who pass the test can be directly used to crawl your target website.

The same is true for fee-based proxies. Many merchants provide proxy extraction APIs, which can obtain hundreds of proxies with a single request. We can also connect them to the proxy pool. But this proxy is also divided into various packages. The quality of open proxy, exclusive proxy, etc., and the probability of being blocked are also different.

Some merchants also use tunnel technology to set up proxies, so we don't know the address and port of the proxy. The proxy pool is maintained by them, which is more convenient to use, but the controllability worse.

There are more stable proxies, such as dial-up proxies, cellular proxies, etc., which are more expensive to access, but it can also solve some IP blocking problems to a certain extent.

Blocking Account

Some information can only be crawled by simulated Login. If you crawl too fast, your account will be blocked directly by the website, so you have nothing to say.

One solution, of course, is to slow down the frequency and control the rhythm.

Another way is to look at other terminals, such as mobile page, APP page, WAP page, to see if there is a way to bypass login.

Another better way is diverge. If you have enough account, set up a pool, such as Cookies pool, Token pool and Sign pool. Anyway, no matter what pool, cookies and tokens from multiple accounts are put into this pool. When you use it, you will randomly tak one from it. If you want to ensure that the crawling efficiency remains the same, then the frequency of cookies and tokens corresponding to each account becomes 1/5 of the 100 accounts compared to 20 accounts, then the probability of being blocked It was reduced accordingly.

Weird Anti-Spider

The above mentioned are some of mainstream Anti-Spider methods, and of course, there are a lot of weird Anti-Spider methods. For example, return fake data, return picture data, return disorderly data, return curse data, return data asking for mercy, that all depends specific situation.

You should also be careful about these Anti-Spider methods. I've seen one of them return rm-rf / directly before. If you happen to have a script to simulate execution and return results, the consequences will be imagined.

JavaScript reverse

With the progress of front-end technology and the enhancement of Anti-Spider consciousness of websites, many websites choose to work hard on the front-end, which is to encrypt or confuse some logic or code in the front-end. Of course, this is not only to protect the front-end code from being stolen easily, but also to Anti-Spider. For example, many Ajax APIs take some parameters, such as sign, token, etc., which have been mentioned before. This kind of data can be crawled by selenium and other ways mentioned above, but the efficiency is too low. After all, it simulates the whole process of web page rendering, and the real data may only be hidden in a small API.

If we can actually find the logic in some of the API parameters and simulate the execution with code, the efficiency will be increased exponentially, and the Anti-Spider phenomenon can be avoided to some extent.

But what is the problem? It's hard.

Webpack is on the one hand, the front-end code has been compressed and transcoded into some bundle files, and the meaning of some variables has been lost, which is difficult to restore. Then some sites add obfuscator mechanisms that turn the front-end code into something you don't understand at all, such as string unscrambling, variable hexadecimal, control flow flattening, infinite debugging, console disabling, etc.. Some use WebAssembly and other technologies to directly compile the front-end core logic, then can only slowly cut, although some have a certain skill, but still will take a lot of time. But once we solve it, then all is well.

A lot of companies that hire crawler engineers ask, do you have a JavaScript reverse base, which websites have you hacked? If you've cracked a website they need, you may be hired directly. The logic of each website is different, the difficulty is not the same.

App

Of course, crawler is not only a web crawler, with the development of the Internet era, now more and more companies choose to put data on the App, and even some companies only have App without website. So the data can only be crawled by App.

How to crawl? The basic tool is the winsock expert, using charies, fiddler capture analysis out of the API information, we can directly simulate it.

What if the API has an encryption parameter? One way you can do this is by crawling, such as mitmproxy, which listens directly to API data. You can walk the hooks on the other hand, such as the Xposed can also be got.

How to realize automation when crawling? You can't poke it. In fact, there are many tools. Android's native adb tools are also good. Appium is now a relatively mainstream solution. Of course, there are some other tools that can also be implemented.

Finally, sometimes I really don't want to go through the automatic process, and I want to extract some of the API logic in it, so I have to reverse it. IDA pro, jdax, FRIDA and other tools are useful. Of course, this process is just as painful as JavaScript reverse, and I may even have to read assembly instructions.

Intelligence

If you are familiar with the above, Congratulations! You have more than 80 or 90 percent of the crawler players, of course, dedicated to doing JavaScript reverse, App reverse are standing at the top of the food chain of men, this strictly speaking is not a crawler category.

In addition to the above skills, in some cases, we may also need to combine some machine learning techniques to make our crawlers more intelligent.

For example, many blogs and news articles have a similar page structure and the information to be extracted is similar.

For example, how to distinguish a page from an index page or a detail page? How to extract links to articles on detail pages? How to parse the content of an article page? All of this can actually be calculated by some algorithm.

As a result, some intelligent parsing techniques were developed, such as the extraction detail page, which a friend wrote with the GeneralNewsExtractor, which performed very well.

If I have a demand, I need to crawl 10000 news website data and write XPath one by one? no need. If there is intelligent parsing technology, under the condition of tolerance for certain errors, this is a matter of minutes.

In short, if we can learn this piece, our crawler technology will be even more powerful.

Operation and maintenance

This is also a major piece, crawlers are also closely related to operation and maintenance.

For example, write a crawler, how to quickly deploy to 100 hosts to run.

For example, how to flexibly monitor the running state of each crawler.

For example, how to flexibly monitor the running state of each crawler.

For example, how to monitor the memory usage and CPU consumption of some crawlers.

For example, how to control the timing operation of crawlers scientifically.

For example, there is a problem with the crawler, how to receive timely notification, how to set up a scientific alarm mechanism.

There are different methods for deployment, such as ansible. If you use scrapy, you can use scrapyd, and then with some management tools, you can complete some monitoring and timing tasks. But now I use Docker + Kubernetes, plus DevOps, such as GitHub Actions, Azure Pipelines, Jenkins, etc., to quickly implement distribution and deployment.

As for timing tasks, some of you use crontab, some use apscheduler, some use management tools, some use kubernetes, and I use kubernetes more, timing tasks are also very well implemented.

As for monitoring, there are also a lot of specialized crawler management tools with some monitoring and alarm functions. Some cloud services also come with some monitoring capabilities. I used Kubernetes + Prometheus + Grafana, the CPU, memory and running state were clear at a glance, and the alarm mechanism was also very convenient in Grafana, which supported Webhook, mail, etc.

Kafka and elasticsearch are very convenient for data storage and monitoring. I mainly use the latter, and then cooperate with grafana. Data crawling volume, crawling speed and other monitoring are also clear at a glance.

Conclusion

At this moment, some of the knowledge points covered by the crawler are basically mentioned. Hos to sort out whether it is computer network, programming foundation, front-end development, back-end development, App development and reverse, network security, database, operation and maintenance, machine learning all covered? The above summary can be regarded as the path from newbie to master of crawler. There are actually many points that can be studied in each direction. Each point is refined, and it will be very amazing.