Python Web Crawlers – BeautifulSoup User Guide

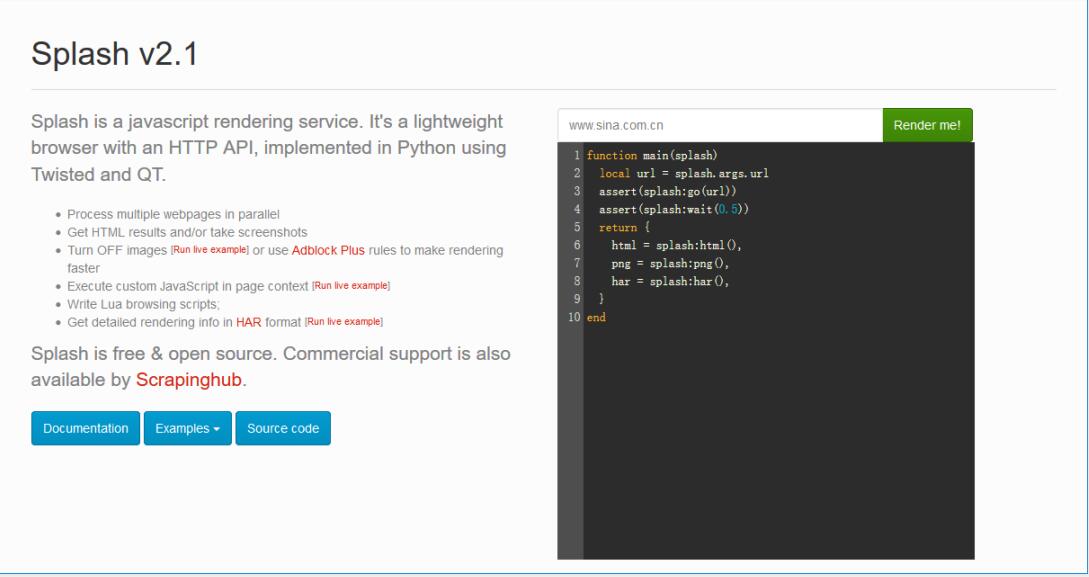

The official documentation describes it as follows:

BeautifulSoup is a Python library for extracting data from HTML and XML files. It works with your favorite parser to provide the usual ways of navigating, searching, and modifying the document. BeautifulSoup can save you hours or even days of work.

Note: All of the following examples were tested with Python 3.

Installation

You can install directly using pip:

$ pip install beautifulsoup4

BeautifulSoup supports the HTML parser in Python's standard library as well as several third-party parsers, such as lxml (which also provides an XML parser) and html5lib. Each third-party parser has to be installed separately:

$ pip install lxml

$ pip install html5lib

Get started

BeautifulSoup is quite powerful, but this guide introduces only its most frequently used features.

Simple usage

Passing a document to the BeautifulSoup constructor gives you a document object. The document can be either a string or an open file handle.

>>> from bs4 import BeautifulSoup

>>> soup = BeautifulSoup("<html><body><p>data</p></body></html>", "html.parser")

>>> soup

<html><body><p>data</p></body></html>

>>> soup('p')

[<p>data</p>]

Here an HTML string is passed in, and soup is the resulting document object. BeautifulSoup first converts the document to Unicode, turning HTML entities into Unicode characters, and then parses it. If you do not name a parser, BeautifulSoup picks the best one that is installed (recent versions also issue a warning); if you do name one, it uses that parser. It is generally best to specify the parser explicitly. In practice, BeautifulSoup is usually combined with requests, a library for fetching a web page's source code. requests is not covered here; see our blog post "" or the official requests documentation to learn more about it.

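The second argument to the BeautifulSoup constructor selects the parser. It can name either:

- The type of document to parse: "html", "xml", or "html5"

- A specific parser: "lxml", "html5lib", or "html.parser" ("xml" and "lxml" require lxml; "html5" and "html5lib" require html5lib)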

from bs4 import BeautifulSoup

import requests

# .content is the raw response body as bytes
html = requests.get('http://www.jianshu.com/').content

soup = BeautifulSoup(html, 'html.parser', from_encoding='utf-8')

result = soup('div')
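The points above can be checked offline with a minimal sketch. It assumes only that BeautifulSoup 4 is installed (no network access and no third-party parser), and the markup is invented for illustration. It shows that HTML entities become Unicode characters, that the constructor accepts a file handle as well as a string, and that the built-in "html.parser" is named explicitly.

```python
import tempfile

from bs4 import BeautifulSoup

# Hypothetical markup containing HTML entities.
markup = "<html><body><p>caf&eacute; &amp; cr&egrave;me</p></body></html>"

# Pass a string and name the parser explicitly ("html.parser" ships with Python).
soup = BeautifulSoup(markup, "html.parser")

# Entities such as &eacute; have been converted to Unicode characters.
text = soup.p.string
print(text)  # café & crème

# The constructor also accepts an open file handle instead of a string.
with tempfile.TemporaryFile("w+", encoding="utf-8") as f:
    f.write(markup)
    f.seek(0)
    soup_from_file = BeautifulSoup(f, "html.parser")

print(soup_from_file.p.string == text)  # True
```

When the tree is printed back out, BeautifulSoup re-escapes only the characters that must be escaped, so str(soup.p) gives `<p>café &amp; crème</p>`.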

Types of objects

BeautifulSoup turns a complex HTML document into a tree of Python objects, one object per node. Every object is one of four types: Tag, NavigableString, BeautifulSoup, or Comment.

- Tag: simply put, an HTML tag, such as the div and p tags above. Every Tag has two important attributes: name, the tag's name (for example "p"), and attrs, a dictionary of the tag's HTML attributes (for example class or href).

- NavigableString: the text inside a tag, for example soup.p.string.

- BeautifulSoup: the document as a whole.

- Comment: a special type of NavigableString that holds an HTML comment; its value does not include the <!-- and --> comment markers.
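The four object types can be seen in a short sketch. The one-line document below is hypothetical and parsed with the built-in "html.parser"; the code simply prints the type of each node it visits.

```python
from bs4 import BeautifulSoup, Comment, NavigableString, Tag

html = '<p class="intro" id="first">Hello<!-- a hidden note --></p>'
soup = BeautifulSoup(html, "html.parser")

p = soup.p
print(type(soup).__name__)  # BeautifulSoup: the document as a whole
print(type(p).__name__)     # Tag
print(p.name, p.attrs)      # the tag's name and its attribute dictionary

# The <p> tag has two children: a text node and a comment.
text = p.contents[0]
comment = p.contents[1]
print(type(text).__name__, repr(str(text)))        # NavigableString 'Hello'
print(type(comment).__name__, repr(str(comment)))  # Comment ' a hidden note '

# Comment is a subclass of NavigableString, and its value omits <!-- and -->.
print(isinstance(comment, NavigableString))  # True
```

Note that class is treated as a multi-valued attribute, so p.attrs["class"] is a list (["intro"]) rather than a plain string.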

Examples

Here is an example to show you the common usage of BeautifulSoup:


from bs4 import BeautifulSoup

import requests

html_doc = """

<head>

<meta charset="utf-8">

<meta http-equiv="X-UA-Compatible" content="IE=Edge">

<title>Home-Brief Book</title>

</head>

<body class="output fluid zh cn win reader-day-mode reader-font2 " data-js-module="recommendation" data-locale="zh-CN">

<ul class="article-list thumbnails">

<li class=have-img>

<a class="wrap-img" href="/p/49c4728c3ab2"><img src="http://upload-images.jianshu.io/upload_images/2442470-745c6471c6f8258c.jpg?imageMogr2/auto-orient/strip%7CimageView2/1/w/300/h/300" alt="300" /></a>

<div>

<p class="list-top">

<a class="author-name blue-link" target="_blank" href="/users/0af6b163b687">A Sui rushes forward</a>

<em>·</em>

<span class="time" data-shared-at="2016-07-27T07:03:54+08:00"></span>

</p>

<h4 class="title"><a target="_blank" href="/p/49c4728c3ab2"> With only these six software installed, the work efficiency is very high</a></h4>

<div class="list-footer">

<a target="_blank" href="/p/49c4728c3ab2">

read 1830

</a> <a target="_blank" href="/p/49c4728c3ab2#comments">

· comment

</a> <span> · like 95</span>

<span> · reward 1</span>

</div>

</div>

</li>

</ul>

</body>

"""

soup = BeautifulSoup(html_doc, 'html.parser')

# Find all relevant nodes

tags = soup.find_all('li', class_="have-img")

for tag in tags:
    image = tag.img['src']
    article_user = tag.p.a.get_text()
    article_user_url = tag.p.a['href']
    created = tag.p.span['data-shared-at']
    article_url = tag.h4.a['href']
    # find_all() can be called again on a tag that was already found
    tag_span = tag.div.div.find_all('span')
    likes = tag_span[0].get_text(strip=True)
    print(article_user, article_url, created, likes)

BeautifulSoup navigation mostly consists of moving from a tag to its child nodes and reading their attributes. Accessing a tag name as an attribute returns only the first matching tag in the current document; soup.li, for example, returns the first <li>. To get all matching tags, or anything more complex than the first tag with a given name, use find_all(). The find_all() method searches all descendants of the current tag and returns every one that matches the given filters. It accepts the following parameters:

find_all(name, attrs, recursive, string, limit, **kwargs)

1. Search by name: the name parameter finds all tags with the given name; text strings are ignored:

soup.find_all("li")

2. Search by id: any keyword argument that find_all() does not recognize is treated as a filter on the tag attribute of that name. Passing id, for example, matches tags by their id attribute:

soup.find_all(id='link2')

3. Search by attrs: some attributes, such as the HTML5 data-* attributes, cannot be used as keyword arguments because their names are not valid Python identifiers. Instead, pass a dictionary through the attrs parameter of find_all() to search for tags with such attributes:

soup.find_all(attrs={"data-foo": "value"})

4. Search by CSS class: searching for tags by CSS class name is very useful, but class is a reserved word in Python, so using class as a keyword argument causes a syntax error. Since BeautifulSoup 4.1.1, you can search by CSS class name with the class_ parameter:

soup.find_all('li', class_="have-img")

5. Search by string: the string parameter matches against the text content of the document rather than tag names. Like name, it accepts a string, a regular expression, a list, or True. For example:

soup.find_all("a", string="Elsie")

6. Search with recursive: by default, a tag's find_all() method examines all descendants of that tag. To consider only its direct children, pass recursive=False:

soup.find_all("title", recursive=False)

find_all() is the most frequently used search method in BeautifulSoup, so it has a shorthand: calling a BeautifulSoup object or a Tag as if it were a function is the same as calling its find_all() method. The following two lines are equivalent:

soup.find_all("a")

soup("a")

get_text()

If you only want the text content of a tag, use get_text(). It returns all the text inside the tag, including the text of its descendant tags, as a single Unicode string:

tag.p.a.get_text()

For more details, refer to the official BeautifulSoup documentation.
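To try the search options described above together, here is a sketch on a small, hypothetical document (the markup, the link ids, and the data-id attribute are invented for this example and are not taken from jianshu.com). It assumes BeautifulSoup 4 with the built-in "html.parser", and it also uses find_all()'s limit argument, which stops the search after that many matches.

```python
import re

from bs4 import BeautifulSoup

# A small hypothetical document used only for this sketch.
html_doc = """
<html><head><title>Demo</title></head>
<body>
<ul>
  <li class="have-img" data-id="1"><a id="link1" href="/p/1">Elsie</a></li>
  <li class="have-img" data-id="2"><a id="link2" href="/p/2">Lacie</a></li>
  <li class="plain"><a id="link3" href="/p/3">Tillie</a></li>
</ul>
</body></html>
"""
soup = BeautifulSoup(html_doc, "html.parser")

# Dot access returns only the first matching tag.
first_li = soup.li

# 1. By name: every <li> in the document.
all_li = soup.find_all("li")

# 2. Unrecognized keyword arguments filter on attributes (here: id).
link2 = soup.find_all(id="link2")

# 3. Attributes whose names are not valid Python identifiers go in attrs.
second = soup.find_all(attrs={"data-id": "2"})

# 4. CSS class via class_ (class is a reserved word in Python).
with_img = soup.find_all("li", class_="have-img")

# 5. string accepts a string, a regular expression, a list, or True.
names = soup.find_all("a", string=re.compile("^[EL]"))

# 6. recursive=False restricts the search to direct children.
direct = soup.html.find_all("title", recursive=False)   # <title> sits inside <head>
in_head = soup.head.find_all("title", recursive=False)  # a direct child of <head>

# limit stops the search after the given number of matches.
first_two = soup.find_all("li", limit=2)

# Calling the object is shorthand for find_all().
print(soup("a") == soup.find_all("a"))  # True

# get_text() collects the text of a tag and all its descendants.
print(soup.ul.get_text(" ", strip=True))  # Elsie Lacie Tillie
```

Here get_text(" ", strip=True) strips whitespace from each piece of text, drops the empty ones, and joins the rest with a space, which is handy for the whitespace-heavy markup that real pages usually contain.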